Late last month, the San Francisco-based startup HeHealth announced the launch of Calmara.ai, a cheerful, emoji-laden website the company describes as “your tech savvy BFF for STI checks.”

The concept is simple. A user concerned about their partner’s sexual health status just snaps a photo (with consent, the service notes) of the partner’s penis (the only part of the human body the software is trained to recognize) and uploads it to Calmara.

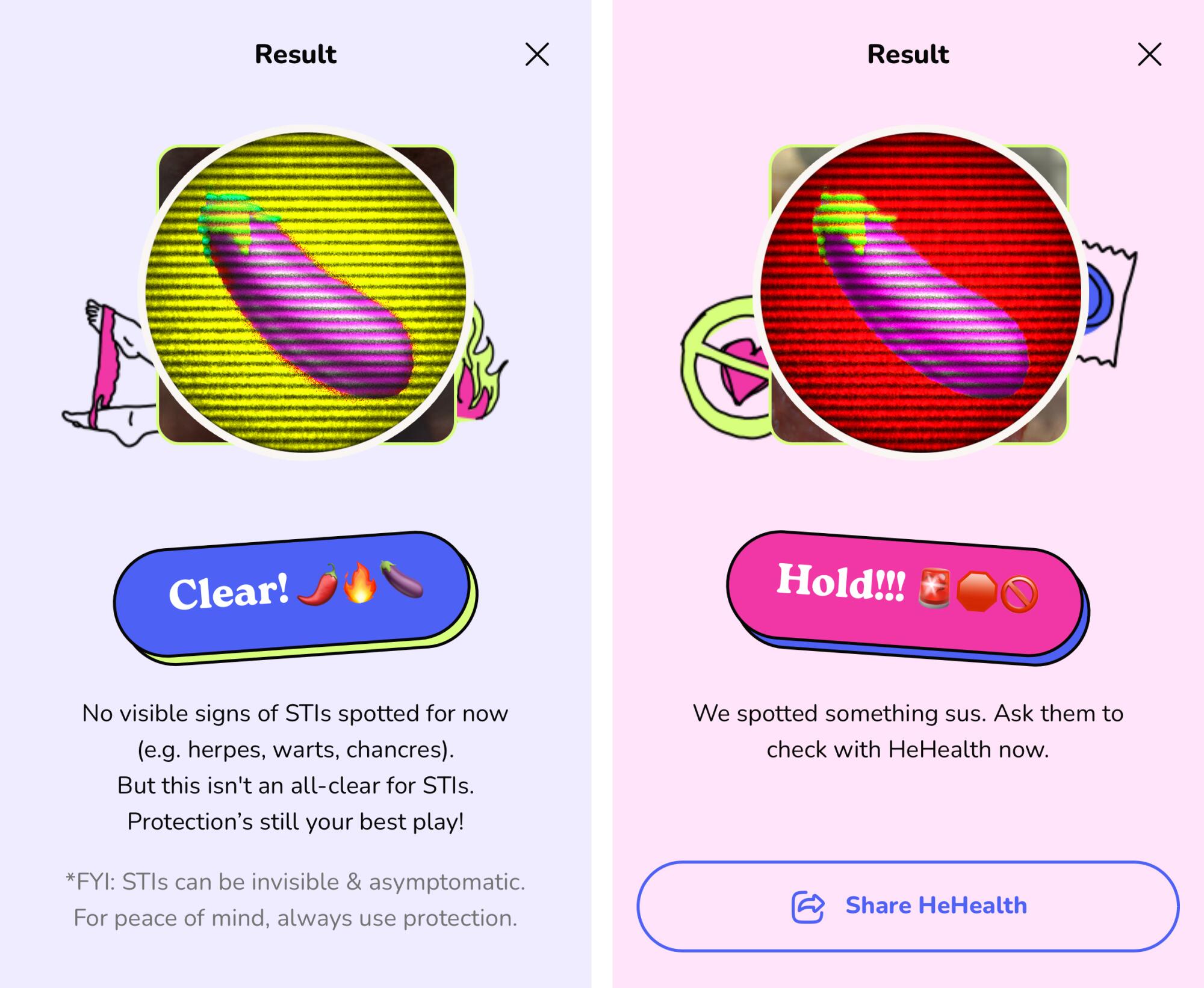

In seconds, the site scans the image and returns one of two messages: “Clear! No visible signs of STIs spotted for now” or “Hold!!! We spotted something sus.”

Calmara describes the free service as “the next best thing to a lab test for a quick check,” powered by artificial intelligence with “up to 94.4% accuracy rate” (though finer print on the site clarifies its actual performance is “65% to 96% across various conditions.”)

Since the site’s debut, privacy and public health experts have pointed with alarm to a number of significant oversights in Calmara’s design, such as its flimsy consent verification, its potential to receive child pornography and its over-reliance on images to screen for conditions that are often invisible.

But even as a rudimentary screening tool for visual signs of sexually transmitted infections in one specific human organ, tests of Calmara showed the service to be inaccurate, unreliable and prone to the same kind of stigmatizing information its parent company says it wants to combat.

A Los Angeles Times reporter uploaded to Calmara a broad range of penis images taken from the Centers for Disease Control and Prevention’s Public Health Image Library, the STD Center NY and the Royal Australian College of General Practitioners.

Calmara issued a “Hold!!!” to multiple images of penile lesions and bumps caused by sexually transmitted conditions, including syphilis, chlamydia, herpes and human papillomavirus, the virus that causes genital warts.

But the site failed to recognize some textbook images of sexually transmitted infections, including a chancroid ulcer and a case of syphilis so pronounced the foreskin was no longer able to retract.

Calmara’s AI frequently misidentified naturally occurring, non-pathological penile bumps as signs of infection, flagging multiple images of disease-free organs as “something sus.”

It also struggled to distinguish between inanimate objects and human genitals, issuing a cheery “Clear!” to images of both a novelty penis-shaped vase and a penis-shaped cake.

“There are so many things wrong with this app that I don’t even know where to begin,” said Dr. Ina Park, a UC San Francisco professor who serves as a medical consultant for the CDC’s Division of STD Prevention. “With any tests you’re doing for STIs, there is always the possibility of false negatives and false positives. The issue with this app is that it appears to be rife with both.”

Dr. Jeffrey Klausner, an infectious-disease specialist at USC’s Keck School of Medicine and a scientific adviser to HeHealth, acknowledged that Calmara “can’t be promoted as a screening test.”

“To get screened for STIs, you’ve got to get a blood test. You have to get a urine test,” he said. “Having someone look at a penis, or having a digital assistant look at a penis, is not going to be able to detect HIV, syphilis, chlamydia, gonorrhea. Even most cases of herpes are asymptomatic.”

Calmara, he said, is “a very different thing” from HeHealth’s signature product, a paid service that scans images a user submits of his own penis and flags anything that merits follow-up with a healthcare provider.

Klausner did not respond to requests for additional comment about the app’s accuracy.

Both HeHealth and Calmara use the same underlying AI, though the two sites “may have differences at identifying issues of concern,” co-founder and CEO Dr. Yudara Kularathne said.

“Powered by patented HeHealth wizardry (think an AI so sharp you’d think it aced its SATs), our AI’s been battle-tested by over 40,000 users,” Calmara’s website reads, before noting that its accuracy ranges from 65% to 96%.

“It’s great that they disclose that, but 65% is terrible,” said Dr. Sean Young, a UCI professor of emergency medicine and executive director of the University of California Institute for Prediction Technology. “From a public health perspective, if you’re giving people 65% accuracy, why even tell anyone anything? That’s potentially more harmful than beneficial.”

Kularathne said the accuracy range “highlights the complexity of detecting STIs and other visible conditions on the penis, each with its unique characteristics and challenges.” He added: “It’s important to understand that this is just the starting point for Calmara. As we refine our AI with more insights, we expect these figures to improve.”

On HeHealth’s website, Kularathne says he was inspired to start the company after a friend became suicidal after “an STI scare magnified by online misinformation.”

“Numerous physiological conditions are often mistaken for STIs, and our technology can provide peace of mind in these situations,” Kularathne posted Tuesday on LinkedIn. “Our technology aims to bring clarity to young people, especially Gen Z.”

Calmara’s AI also mistook some physiological conditions for STIs.

The Times uploaded a number of images onto the site that were posted on a medical website as examples of non-communicable, non-pathological anatomical variations in the human penis that are sometimes confused with STIs, including skin tags, visible sebaceous glands and enlarged capillaries.

Calmara identified each one as “something sus.”

Such inaccurate information could have exactly the opposite effect on young users than the “clarity” its founders intend, said Dr. Joni Roberts, an assistant professor at Cal Poly San Luis Obispo who runs the campus’s Sexual and Reproductive Health Lab.

“If I am 18 years old, I take a picture of something that is a normal occurrence as part of the human body, [and] I get this that says that it’s ‘sus’? Now I’m stressing out,” Roberts said.

“We already know that mental health [issues are] extremely high in this population. Social media has run havoc on people’s self image, worth, depression, et cetera,” she said. “Saying something is ‘sus’ without providing any information is problematic.”

Kularathne defended the site’s choice of language. “The phrase ‘something sus’ is deliberately chosen to indicate ambiguity and suggest the need for further investigation,” he wrote in an email. “It’s a prompt for users to seek professional advice, fostering a culture of caution and responsibility.”

Still, “the misidentification of healthy anatomy as ‘something sus’ if that happens, is indeed not the outcome we aim for,” he wrote.

Users whose photos are issued a “Hold” notice are directed to HeHealth where, for a fee, they can submit additional photos of their penis for further scanning.

Those who get a “Clear” are told “No visible signs of STIs spotted for now . . . But this isn’t an all-clear for STIs,” noting, correctly, that many sexually transmitted conditions are asymptomatic and invisible. Users who click through Calmara’s FAQs will also find a disclaimer that a “Clear!” notification “doesn’t mean you can skimp on further checks.”

Young raised concerns that some people might use the app to make immediate decisions about their sexual health.

“There’s more ethical obligations to be able to be transparent and clear about your data and practices, and to not use the typical startup approaches that a lot of other companies will use in non-health spaces,” he said.

In its current form, he said, Calmara “has the potential to further stigmatize not only STIs, but to further stigmatize digital health by giving inaccurate diagnoses and having people make claims that every digital health tool or app is just a big sham.”

HeHealth.ai has raised about $1.1 million since its founding in 2019, co-founder Mei-Ling Lu said. The company is currently seeking another $1.5 million from investors, according to PitchBook.

Medical experts interviewed for this article said that technology can and should be used to reduce barriers to sexual healthcare. Providers including Planned Parenthood and the Mayo Clinic are using AI tools to share vetted information with their patients, said Mara Decker, a UC San Francisco epidemiologist who studies sexual health education and digital technology.

But when it comes to Calmara’s approach, “I basically can see only negatives and no benefits,” Decker said. “They could just as easily replace their app with a sign that says, ‘If you have a rash or noticeable sore, go get tested.’”
